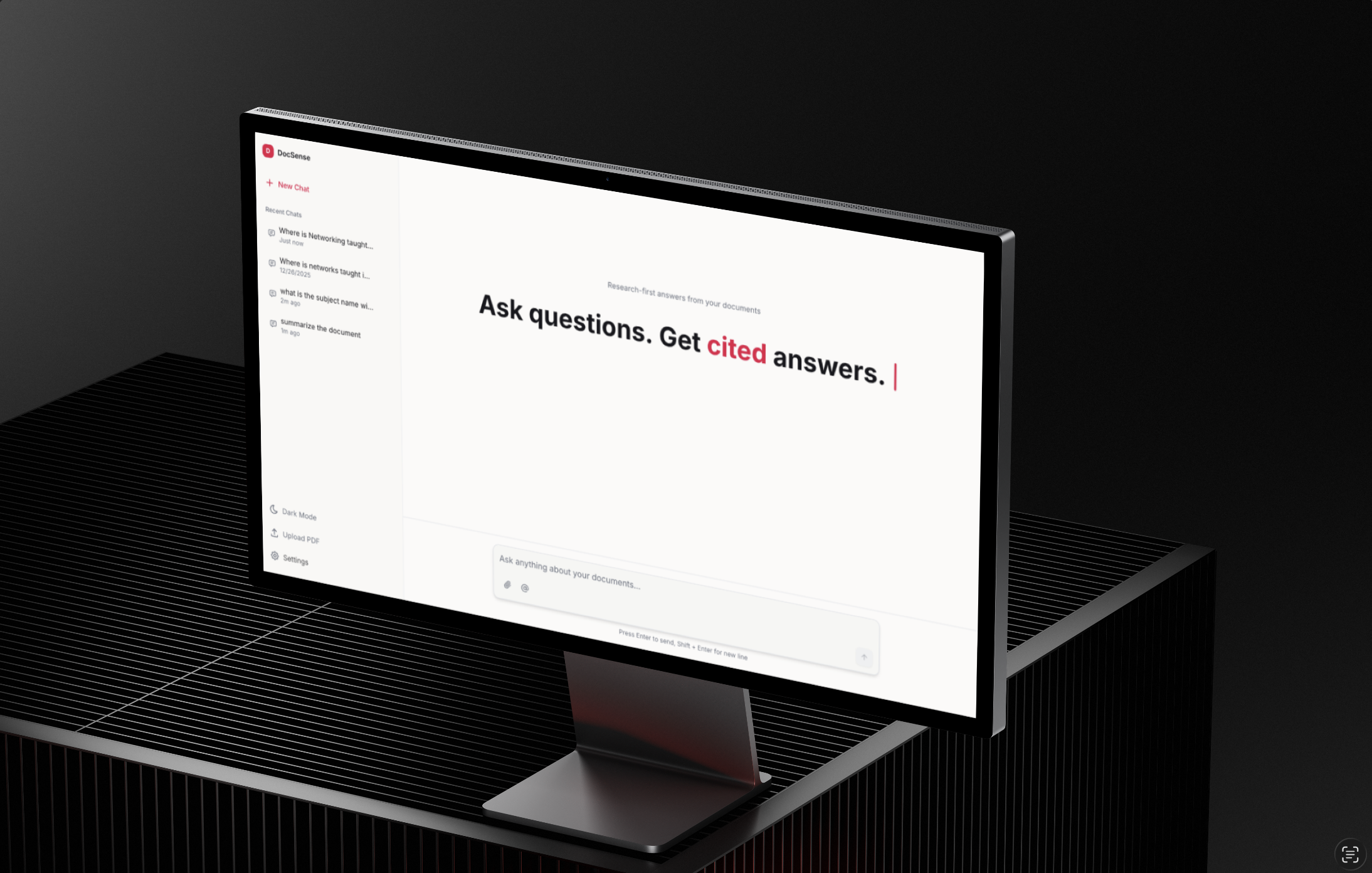

DocSense - AI Research Assistant

Research-first AI application that answers questions from documents with page-level citations

An AI-powered research assistant that lets users ask natural language questions about their PDF documents and receive precise answers with page-level citations. It features real-time streaming responses, an interactive PDF viewer with citation linking, multi-document search, and a LangGraph agent for document retrieval and synthesis.

OVERVIEW

DocSense is a full-stack AI research application designed to change how users interact with PDF documents. Built with a research-first approach, the platform lets users upload multiple PDF documents and ask questions in natural language, receiving accurate answers with page-level citations that link directly to the source material.

The frontend is built with Next.js 15 and React 19 and provides a responsive interface with dark/light mode support. The backend is powered by FastAPI and implements a LangGraph agent that orchestrates document search, content extraction, and answer synthesis using Google Gemini AI.

Key features include real-time Server-Sent Events (SSE) streaming for live response generation, an interactive PDF viewer that navigates to the cited page when a citation is clicked, multi-document search across all uploaded PDFs at once, and semantic chunking for improved retrieval accuracy. Redis Queue (RQ) handles long-running queries as asynchronous jobs. Document management covers upload, delete, and metadata tracking, and a Perplexity-style chat interface makes the agent's reasoning steps visible.

The application uses LangGraph to create a multi-node agent workflow that analyzes queries, searches documents using keyword matching and semantic chunking, extracts relevant content from PDF pages, synthesizes answers with inline citations, and generates UI blocks for enhanced visualization. To keep RAG (Retrieval-Augmented Generation) reliable, the system implements fallback mechanisms so that document content is always available for answer generation.
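The search and fallback steps of the workflow can be illustrated with a minimal plain-Python sketch. This is not the DocSense code and does not use the LangGraph API: the in-memory `Corpus` layout, the `Hit` record, and the functions `analyze_query`, `search`, `fallback`, and `build_context` are all hypothetical names chosen for illustration, and the Gemini synthesis step is left out. The sketch only shows how keyword matching can produce page-level citations and how a fallback can ensure the synthesis step never receives empty context.

```python
import re
from dataclasses import dataclass

# Hypothetical in-memory corpus: {document name: [text of page 1, text of page 2, ...]}
Corpus = dict[str, list[str]]


@dataclass
class Hit:
    doc: str
    page: int   # 1-based page number, used for the citation
    text: str
    score: int  # number of keyword occurrences on the page


def tokenize(text: str) -> list[str]:
    """Split text into lowercase alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def analyze_query(question: str) -> list[str]:
    """Keep words longer than three characters as search keywords (an assumed heuristic)."""
    return [word for word in tokenize(question) if len(word) > 3]


def search(corpus: Corpus, keywords: list[str], top_k: int = 3) -> list[Hit]:
    """Score every page of every document by keyword occurrences and keep the best pages."""
    hits = []
    for doc, pages in corpus.items():
        for number, text in enumerate(pages, start=1):
            words = tokenize(text)
            score = sum(words.count(keyword) for keyword in keywords)
            if score > 0:
                hits.append(Hit(doc, number, text, score))
    hits.sort(key=lambda hit: hit.score, reverse=True)
    return hits[:top_k]


def fallback(corpus: Corpus, top_k: int = 3) -> list[Hit]:
    """Assumed fallback: use the first page of each document when nothing matched."""
    return [Hit(doc, 1, pages[0], 0) for doc, pages in corpus.items() if pages][:top_k]


def build_context(corpus: Corpus, question: str) -> str:
    """Return citation-tagged page text that a synthesis step could place in its prompt."""
    hits = search(corpus, analyze_query(question)) or fallback(corpus)
    return "\n".join(f"[{hit.doc}, p. {hit.page}] {hit.text}" for hit in hits)


if __name__ == "__main__":
    corpus = {
        "a.pdf": ["Intro to graphs.", "Redis queues handle long jobs."],
        "b.pdf": ["Streaming with SSE."],
    }
    print(build_context(corpus, "How do Redis queues work?"))
```

Because each hit carries its document name and page number, the answer text can embed citations that a viewer resolves to a specific page. In a LangGraph version, each of these functions would presumably become a node operating on shared state.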
Built with production-grade reliability, the application includes Docker Compose configuration for easy deployment, comprehensive error handling, caching mechanisms for efficient PDF processing, and a scalable architecture that separates API, worker, and frontend services. The platform prioritizes accuracy and traceability, ensuring every answer can be verified through direct source citations.
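One way the PDF processing cache could work is to key extracted page text by a hash of the file contents, so the same upload is never parsed twice. The sketch below is an assumption about the approach, not the project's actual implementation: `PageTextCache` and `fake_extract` are hypothetical names, and `fake_extract` stands in for a real PDF parser.

```python
import hashlib
from typing import Callable


class PageTextCache:
    """Cache extracted page text, keyed by the SHA-256 digest of the PDF bytes."""

    def __init__(self, extract: Callable[[bytes], list[str]]):
        self._extract = extract
        self._store: dict[str, list[str]] = {}
        self.misses = 0  # counts how often the extractor actually ran

    def get_pages(self, pdf_bytes: bytes) -> list[str]:
        key = hashlib.sha256(pdf_bytes).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._extract(pdf_bytes)
        return self._store[key]


def fake_extract(pdf_bytes: bytes) -> list[str]:
    """Stand-in for a PDF parser: treats a form feed character as a page break."""
    return pdf_bytes.decode("utf-8").split("\f")


if __name__ == "__main__":
    cache = PageTextCache(fake_extract)
    cache.get_pages(b"page one\fpage two")
    cache.get_pages(b"page one\fpage two")  # served from the cache
    print(cache.misses)
```

An in-process dictionary is only visible to one service. Since the API and worker run separately, a shared store such as the Redis instance already used for RQ would be a natural place for this cache, although that too is an assumption.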